On the 26th of May, we were thrilled to collaborate with the Media & Learning Association for a Meetup on the pertinent topic of “Accelerating XR workflows with Artificial Intelligence”.

After a welcome from Jeremy Nelson, Senior Director of Creative Studios at the University of Michigan, the Meetup began with a poll. It aimed to gauge how much experience the audience had with AI and XR, and how they had put these technologies to use so far. The results showed strong similarities among most of the attendees.

Most attendees described themselves as either ‘not familiar’ or ‘somewhat familiar’ with AI and XR, split fairly evenly between the two categories. The use cases they had already explored fell mainly within entertainment, education, and healthcare. The most significant challenges cited were technical limitations, a lack of skilled professionals, and a general perception that the technologies are complex.

ABOUT OUR SPEAKERS

Pete Stockley is a Learning Systems Specialist at Omnia Vocational School in Espoo, Finland.

Paul Melis is a Senior Visualisation Advisor at SURF in the Netherlands.

Orestis Spyrou is a Researcher in Extended Realities at the Social & Creative Technologies Lab of Wageningen University & Research, also in the Netherlands.

GENERATIVE AI IN XR – Pete Stockley

As Pete explains, Omnia Vocational School is an organisation that prioritises the individual needs of its students. This is undoubtedly valuable educationally. From a staff perspective, however, it often leaves limited time for researching, trialling, and implementing new tools. Omnia is fortunate in this respect: AI and XR receive considerable attention, to the extent that dedicated AI and XR workgroups are in place. These aim to deepen understanding of generative AI tools, create no-code learning experiences, and meet the ever-changing needs of teachers in vocational training. They also give students the opportunity to actively explore AI and XR, both in terms of content and tools.

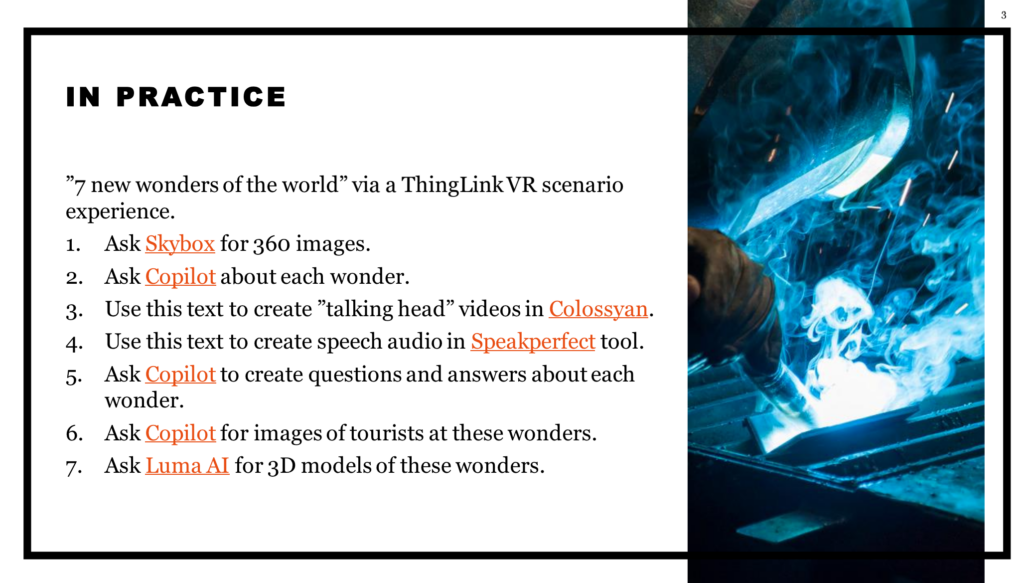

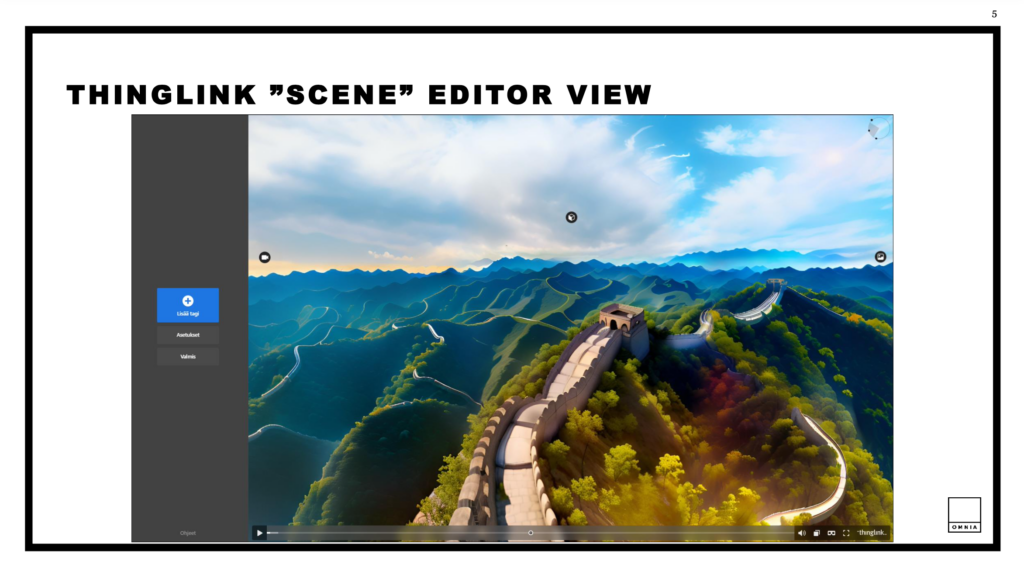

What does this approach mean in practice at Omnia? To demonstrate, Pete presents the ‘7 New Wonders of the World’ experience, which he created with a colleague from the school’s tourism department. In this experience, all seven locations that make up the New Seven Wonders of the World were AI-generated using ThingLink. ThingLink is a Finnish-designed platform for easily creating interactive and immersive experiences. ThingLink’s generative AI function was used to create the locations that students would explore. The information they would read along the way was generated with Microsoft Copilot instead. The accompanying images give a clear breakdown of precisely which AI tools were used, so please feel welcome to take a look and try them out for yourselves!

One of the main reasons ThingLink was chosen for this experience is that it allows interactive tags to be added to 3D models, images, and videos, as well as 360-degree videos. While exploring the seven virtual environments, students could touch these tags to receive information about specific aspects of the landscape in front of them. This informative text was AI-generated, but it is important to note that educators proof-read all of it first to ensure its accuracy. This review step still takes up some of the educators’ time, of course, but considerably less than writing every text from scratch.

As Pete explains, no tool is perfect, but this need not be a major concern. Because they are AI-generated, the environments created with ThingLink are not wholly accurate, especially in their finer details. This would not be acceptable for every use case, but here it gives little cause for worry. As Pete stresses, these environments are excellent for giving both students and teachers active, hands-on experience of AI and XR while enriching the learning experience. After all, the information students absorb via the interactive tagged hotspots is accurate, having been checked by educators.

From developing this experience, which runs in the browser as well as in VR, Pete has drawn several takeaways. The most significant of these are:

- Ideally, work in small teams.

- Look for each tool’s possibilities and restrictions & discover these hands-on whenever possible!

- Test results in authentic learning scenarios.

- Ask for feedback from peers and students, clarifying whether the tool provided added value.

- Share the knowledge!

XR & AI at SURF – Paul Melis

Following Pete Stockley’s in-depth presentation of his AI and XR educational case study, we were also delighted to welcome Paul Melis from SURF. Paul presented his insights into the field from a more technical perspective.

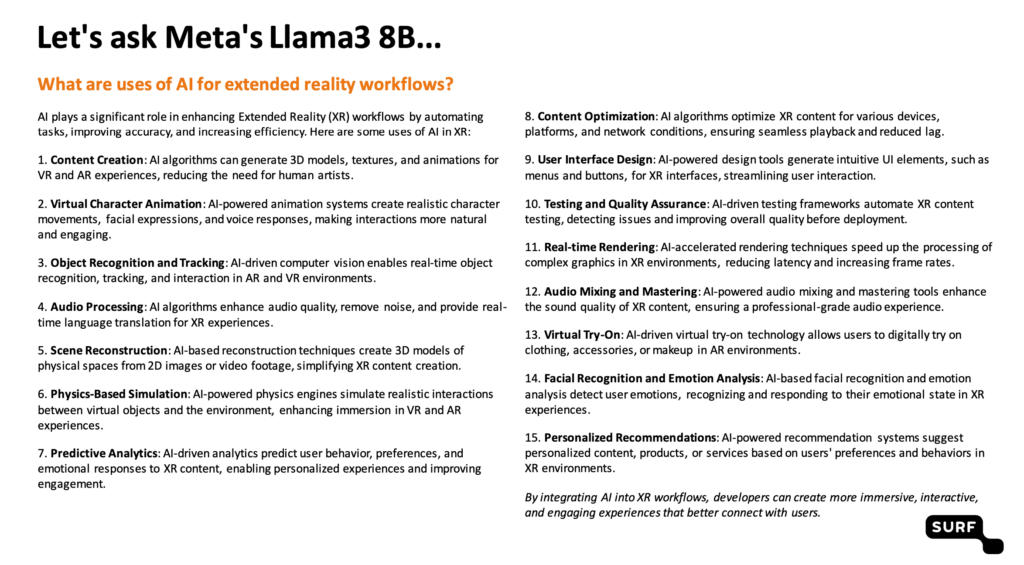

Paul first gives some background on SURF as an organisation oriented towards public values. He clarifies that it plays a central role in the education sector rather than being directly involved in teaching. He then shows the answer Meta’s Llama 3 8B model gave to his prompt: “What are the uses of AI for extended reality workflows?” The results can be seen in the image below. As Paul acknowledges, several more uses could likely be found.

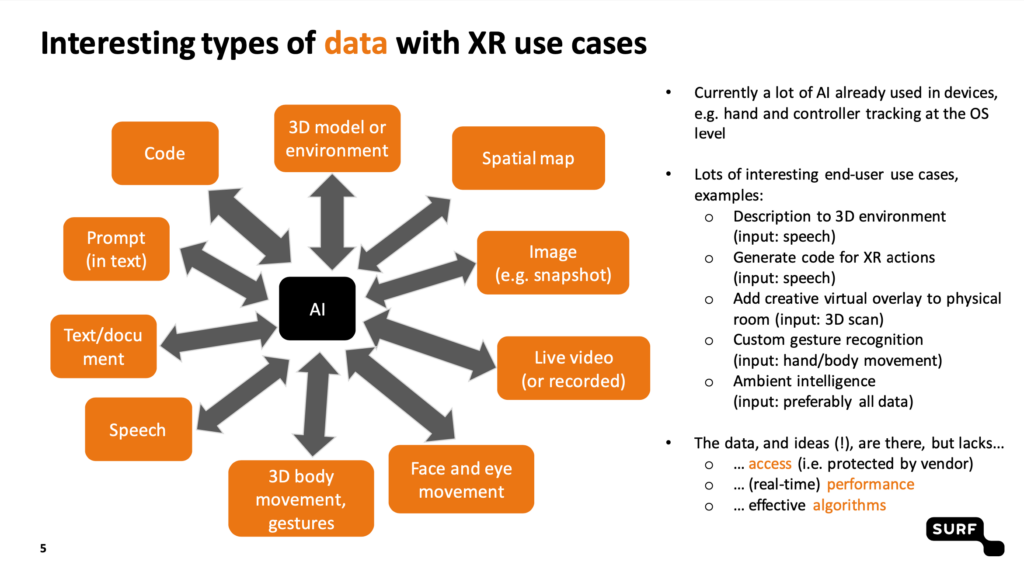

Taking data as his starting point, Paul then discusses the various types of data generated when XR technology is used, both before and during user interaction. He next makes a point that is important to bear in mind: XR headsets already use AI at an operational level, whether or not this is openly advertised. In other words, they are not only gathering complex data but also putting it to complex uses.

Working with this data is difficult for many reasons, not least because it is frequently inaccessible. Even when it is accessible, it is seldom easy to handle, owing to latency and long processing times. Moreover, existing algorithms are often not yet capable of performing certain fundamental tasks. Despite these difficulties, there are potential ways to ease the situation.

Turning to what is currently possible when running AI on XR devices, Paul raises the option of processing data locally rather than in the cloud. Once again, this approach is especially beneficial for latency. It also allows an application to work without a network connection. Local processing has potential ethical and privacy advantages too. The question is: when will it become truly feasible at any scale?

To give an impression of how feasible it currently is to run AI locally, Paul reveals that the query he opened his presentation with was indeed run locally. The catch is that doing so occupies approximately half of his computer’s GPU memory. Running the same query on a Quest 3 headset would consume almost all of the GPU memory available on the device. Despite these clear present-day limitations, Paul offers a silver lining: Qualcomm is actively working towards a solution. If it succeeds, this has great potential.

Moving on to another effort to expand AI’s role within XR, Paul introduces SURF’s in-house generative AI platform, WillMa, which is still under development. WillMa is developed and maintained by SURF’s Machine Learning team. It provides a selection of open-source AI models from Hugging Face and offers the following functions:

- Conversational/instructional/coding LLMs

- Text-to-speech & speech-to-text (Dutch)

- Image generation (Stable Diffusion)

- In the future, support for models uploaded by users

For SURF, hosting this platform in-house brings several benefits. These include digital sovereignty, the freedom to develop it in line with its own community’s goals, the possibility of integrating it with other SURF infrastructure, and an easy means of experimentation.

To put SURF’s current AI and XR work into context, Paul concludes his presentation with an example: an ongoing project on Digital Heritage in XR. In a virtual or mixed reality environment, users can view 3D scans of objects and pick them up using hand tracking. They can then ask questions about both the objects themselves and their broader context. WillMa answers these questions, formulating its responses from metadata that SURF has entered in advance.

Unlocking the Future of XR Entertainment by Pioneering 3D AI Empowered Copilots – Orestis Spyrou

Orestis begins his presentation not with slides but with a live demo of ‘Digibroer’, a generative AI mixed reality application, on the Quest 3. We see the app from Orestis’ own perspective in the headset. We are immediately greeted by a digital avatar standing in front of his real surroundings, which is made possible through passthrough. As the real Orestis begins to speak with his avatar, the avatar replies. At first, it responds with great enthusiasm, if not always with the most relevant remarks. However, as the interaction proceeds and Orestis asks about digital twins in agriculture, the avatar’s accuracy and understanding improve greatly.

Following this memorable demo, Orestis puts the app into context. He created it with his colleagues at Wageningen University & Research. As he explains, the main motivation behind its development was to create an educator available 24/7. It should not only cover a vast range of topics but also answer questions that traditional book learning cannot easily address.

Orestis then discusses what he sees as the most significant benefits of immersive technologies in education. He cites their highly intuitive nature and their ability to transport users to places and scenarios otherwise beyond reach. Adding AI brings the further benefits of greater personalisation and interactivity. As he acknowledges, XR and AI still face numerous technical challenges, such as interoperability. Yet there is much to be positive about, and that is where we ought to focus!

Q&A

Several questions in the Q&A that followed concerned SURF’s WillMa platform and the technical details of Pete’s and Orestis’ apps. One question, however, drew particularly varied responses from our speakers:

‘Did AI add value and speed to your workflows? Did it change the way you are working in any way? Do we all now need to become prompt engineers or can we simply dive in?’

In his response, Paul expressed mixed feelings. For now at least, he seldom uses AI tools in his work, having found those currently available insufficient for his needs. It remains to be seen how soon they will improve enough for him to use them regularly. He concluded, though, by remarking on how astonishingly fast they are currently developing.

Pete, by contrast, already finds considerable value in using AI and XR, both separately and in combination. Using them effectively takes time, effort, and a willingness to accept that things may not work perfectly at the first attempt. For him, though, the results are ultimately more than worthwhile. Moreover, as your familiarity with the technology grows through use, you will likely make up any time that once seemed lost.

Orestis focussed on the final part of the question: whether it is necessary to become a prompt engineer yourself. He suggested asking ChatGPT for assistance when writing prompts, using the LLM to help with phrasing and refining them.

On behalf of both ourselves at XR ERA and the Media & Learning Team, we would like to say a big thank you to Paul, Pete, and Orestis for joining us to share their thoughts and insights!

There will be no Meetup in July owing to a short XR ERA summer break. We’ll be back in August as usual, and we will keep you fully up to date with our plans over the coming weeks.